Have you ever wondered what happens the moment you type www.google.com into your browser and hit enter? In the blink of an eye, a complex page filled with links, images, and videos loads perfectly. How do the world’s largest websites handle millions of simultaneous users without a hitch? How is your data securely transmitted and directed to the correct server?

The answers lie in the three cornerstones that underpin the modern internet: proxies, reverse proxies, and load balancers. Whether you’re a backend engineer, a DevOps specialist, or just a curious mind, understanding these concepts will pull back the curtain on the fascinating world of web infrastructure. This article will use relatable analogies and a step-by-step approach to demystify these seemingly complex but logically elegant components.

Scenario 1: The Personal Bodyguard - The Forward Proxy

Let’s start with a personal scenario. Imagine you want to access an academic website, but your school’s network has restrictions. You discover the IT department offers a “proxy server” configuration. You set it up in your browser, and suddenly, all your web requests are funneled through this server. The proxy fetches the data from the target site on your behalf and delivers it to you. To the website, it looks like the request is coming from the proxy server, concealing your true identity.

In this picture, a forward proxy acts as your personal “digital bodyguard” or “proxy agent.” It primarily serves the client, standing between you and the vast public internet and making requests on your behalf.

Core Responsibilities & Functions:

- Access Control & Content Filtering: This is the classic use case in a corporate environment. A system administrator can deploy a forward proxy that forces all employee web traffic through it. The admin can create a “blacklist” of blocked websites (e.g., social media, entertainment) to enforce company policies. Simultaneously, the proxy can scan all incoming responses for viruses, malware, and malicious scripts, shielding the entire internal network from threats.

- Improving Speed & Saving Bandwidth: Picture this: 10 engineers at your company all want to watch the same online training video. A forward proxy will download the video from the server for the first employee and save a local copy (cache). When engineers 2 through 10 request the same video, the proxy serves it from the cache without re-fetching it from the internet. This dramatically reduces wait times and saves the company significant bandwidth.

- Bypassing Access Restrictions: As in the school example, forward proxies can help users circumvent IP blocks, regional firewalls, and other geo-restrictions to access certain resources. In the realm of personal privacy, VPNs (Virtual Private Networks) serve a related purpose. Rather than proxying individual web requests, a VPN routes all of a device’s traffic through an encrypted tunnel, which likewise hides the client’s IP address from the destination.
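The filtering and caching behavior described above can be sketched in a few lines of Python. This is a toy model rather than a real proxy: the class name `ForwardProxySketch`, the `fake_origin` function, and the example hostnames are all hypothetical, and a production proxy such as Squid also has to deal with cache expiry, HTTPS tunneling, and much more.

```python
from typing import Callable, Dict, Set
from urllib.parse import urlsplit


class ForwardProxySketch:
    """Toy model of a forward proxy: filter by host, then serve from cache."""

    def __init__(self, fetch: Callable[[str], bytes], blocked_hosts: Set[str]):
        self._fetch = fetch              # hypothetical function that contacts the origin
        self._blocked = blocked_hosts    # the administrator's blocklist
        self._cache: Dict[str, bytes] = {}
        self.upstream_calls = 0          # counts real trips to the internet

    def get(self, url: str) -> bytes:
        host = urlsplit(url).hostname or ""
        if host in self._blocked:
            raise PermissionError(f"{host} is blocked by policy")
        if url not in self._cache:       # cache miss: fetch once and keep a copy
            self.upstream_calls += 1
            self._cache[url] = self._fetch(url)
        return self._cache[url]          # cache hit: no upstream traffic


def fake_origin(url: str) -> bytes:
    """Stand-in for the real website (an assumption for this example)."""
    return f"video bytes for {url}".encode()


proxy = ForwardProxySketch(fake_origin, {"social.example"})
for _ in range(10):                      # ten engineers request the same video
    proxy.get("https://training.example/video.mp4")
print(proxy.upstream_calls)              # prints 1
```

Only the first request reaches the origin. The other nine are answered from the proxy’s local copy, which is where the bandwidth saving comes from.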

Summary: The forward proxy looks “outward,” acting on behalf of the client to “proxy” requests to the internet.

Scenario 2: The Versatile Receptionist - The Reverse Proxy

Now, let’s switch our view from the individual user to the provider of a service—the website or application itself. Imagine you’re the manager of a large, popular restaurant. Hundreds of customers flood in simultaneously. If every customer were to barge into the kitchen, find a chef, place an order, and check on its status, it would be a chaotic disaster.

So, you station a highly capable receptionist at the entrance. This receptionist is the sole point of contact between customers and the kitchen (the real restaurant).

- Customers (clients) don’t need to know how big the kitchen is or how many chefs (servers) there are.

- The receptionist greets customers, asks about their needs, and asks for the party size.

- The receptionist has a full overview of the restaurant’s operations, knowing which tables are free and which chefs are less busy.

- The receptionist seats customers at an appropriate table and relays their orders to the correct chef.

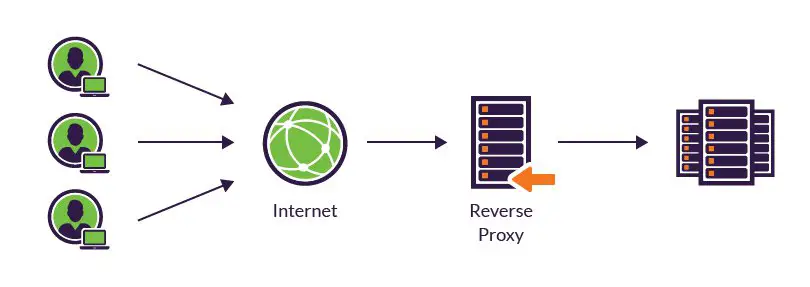

In the technical world, this “receptionist” is the reverse proxy. It plays the opposite role of a forward proxy, serving the server side as the single entry point for all client requests.

Core Responsibilities & Functions:

- Security Bastion: This is arguably its most critical role. A mature application might be backed by hundreds of real servers. Exposing them directly to the internet is like publishing every home address in a city, which is an invitation for disaster. The reverse proxy, as the “first line of defense,” hides the internal IPs and workings of all backend servers, shielding them from most direct internet attacks. Security policies (like a Web Application Firewall or DDoS mitigation) can be deployed centrally on the reverse proxy, providing unified protection for the entire application cluster.

- Load Balancing: This is the reverse proxy’s most famous feature. The receptionist (reverse proxy) distributes customers (network requests) among different chefs (servers). The distribution can follow a simple rotation (round robin) or take each server’s current load into account (for example, its number of active connections), so that no single server is overwhelmed and crashes. This raises the system’s overall throughput and keeps the service available even when an individual server fails.

- SSL/TLS Termination & Encryption: In the modern web, HTTPS (encrypted transport) is standard. Handling the SSL/TLS handshake and encryption/decryption is a CPU-intensive task for the server. The reverse proxy can perform this heavy lifting at the front end. When a request arrives, the proxy completes the encrypted “handshake” and “decrypts” the traffic, then forwards the plain HTTP request to the backend application servers. These servers are freed from encryption duties and can focus on pure business logic. This process of “offloading encryption from the application to the proxy” is called “SSL termination.”

- Content Caching: Like a forward proxy, a reverse proxy can cache responses from backend servers. However, it typically caches “static resources”—files that don’t change often, such as logos, CSS, JavaScript, and product images. When a user requests these resources again, the reverse proxy can deliver them instantly from its cache, bothering the application servers no further. This reduces server load and dramatically speeds up the user experience.
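To make the load-balancing role concrete, here is a minimal sketch of one common strategy, “least connections,” in Python. The `Backend` type, the server names, and the connection counts are invented for illustration. Real reverse proxies track these numbers themselves and offer several other strategies.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Backend:
    name: str
    active_connections: int = 0
    healthy: bool = True


def pick_least_connections(backends: List[Backend]) -> Backend:
    """Choose the healthy backend that is currently handling the fewest requests."""
    candidates = [b for b in backends if b.healthy]
    if not candidates:
        raise RuntimeError("no healthy backends available")
    return min(candidates, key=lambda b: b.active_connections)


# Hypothetical pool: chef-c is idle but marked unhealthy, so it is skipped.
pool = [
    Backend("chef-a", active_connections=12),
    Backend("chef-b", active_connections=3),
    Backend("chef-c", active_connections=1, healthy=False),
]
chosen = pick_least_connections(pool)
chosen.active_connections += 1   # the new request is now in flight
print(chosen.name)               # prints chef-b
```

Skipping unhealthy servers is what lets the receptionist keep seating customers even when one chef is out of action.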

Summary: The reverse proxy looks “inward,” acting on behalf of the servers to “proxy” incoming requests from the internet.

Layered Architecture: Cloud Load Balancers and Reverse Proxies in Tandem

After understanding forward and reverse proxies, a natural question arises: “Major cloud platforms like AWS, Alibaba Cloud, and Google Cloud all offer powerful ‘Load Balancers.’ Since we have classic open-source reverse proxies like Nginx, are cloud LBs a replacement?”

The answer is: Far from it. They are perfect partners, forming the recommended layered architecture for modern, cloud-native applications.

Why a Layered Approach?

Imagine a modern, smart city.

- Layer 1: The Outer Ring Road & Toll Gates. Its job is to handle the immense traffic flowing into the city from all directions and prevent gridlock at the entrances. This role is played by the cloud platform’s Load Balancer. It sits at the edge of your Virtual Private Cloud (VPC) and is the first and only entry point for all public traffic. It operates with massive elasticity and availability, handling huge volumes of raw, unsorted external requests.

- Layer 2: The Internal Transportation Hub & Traffic Police. Once traffic is inside the city, it can’t all be funneled into the downtown area. It needs to be intelligently guided to different districts and streets. This is where a smarter, rule-aware system becomes essential. This is the reverse proxy (like Nginx) that you deploy inside your server cluster. It handles traffic that has already “entered the city” and performs fine-grained routing based on complex rules (like request URL path, user cookies, HTTP headers). For example, it can decide that requests to /api/v1/users all go to the User Service, while requests to /products go to the Product Service. Layer 4 cloud LBs cannot do this kind of content-based routing at all, and Layer 7 cloud LBs usually support a more limited rule set than a self-managed reverse proxy.
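The path-based routing performed by the inner layer can be sketched as a longest-prefix lookup. The two path prefixes come from the example above. The upstream addresses and the `route` function are hypothetical, and a real reverse proxy expresses these rules in its own configuration language rather than in Python.

```python
from typing import Dict, Optional

# Hypothetical internal addresses for the two services named in the text.
ROUTES: Dict[str, str] = {
    "/api/v1/users": "http://user-service.internal:8080",
    "/products": "http://product-service.internal:8080",
}


def route(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the upstream for the longest matching path prefix, or None."""
    matches = [
        prefix for prefix in routes
        # Match the prefix exactly or at a "/" boundary, so "/productsale"
        # is not mistaken for something under "/products".
        if path == prefix or path.startswith(prefix.rstrip("/") + "/")
    ]
    if not matches:
        return None
    return routes[max(matches, key=len)]


print(route("/api/v1/users/42"))   # http://user-service.internal:8080
print(route("/products"))          # http://product-service.internal:8080
print(route("/checkout"))          # None
```

Choosing the longest match lets a more specific rule win when several prefixes overlap.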

This “outer highway + inner traffic police” layered architecture brings immense benefits:

- Ultimate Scalability & Elasticity: When a traffic surge hits, the managed cloud LB at the city’s “entrance” scales to absorb the load, typically without manual intervention. The “inner traffic police” (Nginx) and the “streets” (backend servers) can each scale out independently without interfering with each other.

- Inherent Security: The cloud LB, as the public-facing entry, first shields your internal server IPs from direct scanning and probing from the public internet. The Nginx cluster then acts as a second firewall, creating a defense-in-depth architecture that significantly boosts security.

- Unparalleled Flexibility: You can scale, resize, or even dramatically change your internal architecture (e.g., splitting a monolith into microservices) silently “inside the city” without impacting the cloud LB’s configuration or the external user’s experience.

Therefore, cloud load balancers and reverse proxies work in a powerful tandem: one handles large-scale external access and disaster recovery, the other handles intelligent internal distribution and security reinforcement. This combination is the true hallmark of a modern application architecture.

From Hardware to Software: Lightweight Proxies at the Application Level

For many developers, the “proxy” they interact with isn’t a standalone service like Nginx, but something that runs directly in their code. When you run npm start (Node.js) or java -jar my-app.jar (Java), this application itself acts as a “proxy” or a gateway.

Take the Express.js framework in Node.js as an example. You define different routes (e.g., app.get('/home'), app.post('/login')). All HTTP requests first hit your Express app, which decides which business logic to invoke based on the URL and HTTP method, then sends the processed response back to the client. In this flow, your Express app behaves like an “application-layer proxy” sitting between the client and your business logic.

How does this differ from Nginx?

- Purpose: Nginx is standalone, high-performance software. It is a web server in its own right, capable of serving static files, as well as a full-featured reverse proxy. It is particularly efficient at handling many concurrent connections and serving static files.

- Role: Express.js is a web application framework for Node.js. Its core value is enabling you to quickly and easily build dynamic web services and APIs. It runs on top of the Node.js runtime.

- Performance: Nginx is written in C and optimized for high-concurrency scenarios, which generally makes it much faster than a general-purpose application server at serving static files. While Express.js is powerful, it is not designed to match Nginx’s raw performance for that workload.

The Best Practice in the Real World: Nginx + Express

In the vast majority of production environments, the best practice is to have Nginx in the front and Express.js in the back.

- Nginx: As the reverse proxy, it receives all public requests. It handles SSL/TLS termination, serves static files, load balances requests across multiple Express.js instances (for high availability), and performs basic security filtering.

- Express.js: Focuses solely on business logic, such as database queries, calculations, and generating JSON or HTML responses.

This combination is like a General (Nginx) and a Special Forces unit (Express.js). The General plans the strategy, allocates resources, and handles perimeter defense, while the Special Forces unit carries out the deep strikes (solving specific business problems).

The New Frontier of Identity Management: From Network Layer to Client

So far, we’ve journeyed from the macro view of server architecture to the micro-level of application code. Whether it’s a reverse proxy or an application-level router, the core job is the same: receiving, parsing, routing, and responding to requests.

However, when we shift our gaze from servers to clients, and from code to user behavior, a war centered on “identity” and “isolation” has long been raging.

For platforms like Amazon Associates, the TikTok Creator Fund, and Google Ads, identifying “batch operations” and “fake traffic” is their core competency. Their algorithms have long since surpassed simple IP address detection. Instead, they analyze a holistic digital fingerprint to judge a user’s authenticity. This fingerprint includes: browser fingerprint, OS version, screen resolution, installed fonts, hardware info, and even mouse movement patterns. These combine to create a unique “digital identity” marker.

If an operator logs into and manages hundreds or thousands of social media accounts on a single physical computer using a single browser, the “digital identity” of these accounts is almost 100% identical from the platform’s perspective. The platform’s risk control system will mercilessly flag them as “associated accounts” or “marketing accounts,” leading to a mass ban of all accounts, nullifying all previous efforts. Even using traditional proxies or VPSs to change IPs doesn’t solve the fundamental issue of browser fingerprinting and environment isolation.

How can you create a separate, trusted “digital identity” for each account?

This is where professional Anti-Detect Browser technology comes in. It’s like a “digital plastic surgeon,” completely reshaping the “identity” of each independent browser instance.

FlashID Anti-Detect Browser is a leader in this field. It uses virtualization technology to create multiple isolated browser environments within your OS. Each environment possesses:

- Independent IP Address: Manually configured or automatically assigned by FlashID’s cloud phone/IP pool.

- Independent Browser Fingerprint: By simulating different browsers, OSes, and hardware parameters, it generates a unique, randomizable digital ID (Canvas, WebGL, AudioContext, Fonts, etc.).

- Independent Storage: Cookies, LocalStorage, and other data are fully isolated, so the login state of one account doesn’t affect another.

- Automation & Synchronization: With built-in RPA automation tools and window synchronization, it can perform automated operations (e.g., liking, commenting, posting) on multiple accounts according to a predefined script, dramatically freeing up human labor.

Through this, FlashID transforms your multi-account management business—whether it’s for affiliate marketing, cross-border e-commerce, social media growth, or online earning tasks—from a “high-risk black art” into a “secure, controllable, and scalable standardized operation plan.” It solves the fundamental problem of identity isolation at the lowest level, allowing you to focus on business growth without the anxiety of account security issues.

Frequently Asked Questions (FAQ)

Q: Are VPNs and forward proxies the same thing?

A: No, although they overlap in purpose. A forward proxy forwards requests on behalf of a client, usually for specific protocols such as HTTP, and the browser or application must be configured to use it. A VPN works at the network level: it establishes an encrypted private tunnel over a public network and routes all of the device’s traffic through it, protecting data privacy and integrity. Both hide the client’s IP address from the destination.

Q: If my company is small with only a few employees, is it still necessary to use a forward proxy?

A: It depends on your needs. If you just want to prevent people from watching videos or playing games during work hours, a corporate firewall or DNS filtering might be sufficient. However, if you need to manage web behavior, audit logs, or use caching to optimize bandwidth (especially for multinational companies), a forward proxy (like Squid) will still offer significant management and efficiency benefits.

Q: Is a reverse proxy the same as an API Gateway?

A: They are conceptually very similar; an API Gateway can be seen as a reverse proxy that is purpose-built for microservices with more powerful features. A traditional reverse proxy might focus on load balancing and request forwarding, while an API Gateway adds fine-grained management of the API lifecycle, such as authentication, rate limiting/circuit breaking, service monitoring, and protocol translation, making it a core component of a microservices architecture.
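Rate limiting is one of the gateway features mentioned above, and a token bucket is one widely used way to implement it. The sketch below is illustrative only: the `TokenBucket` class and its parameters are assumptions for this example, not the API of any particular gateway product.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow short bursts up to `capacity`, refilling at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self._clock = clock              # injectable so the example is deterministic
        self._tokens = capacity          # start with a full bucket
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity,
                           self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1            # spend one token for this request
            return True
        return False                     # caller would answer with HTTP 429


fake_now = [0.0]                         # hand-driven clock for the demo
bucket = TokenBucket(rate_per_sec=1, capacity=2, clock=lambda: fake_now[0])
results = [bucket.allow() for _ in range(3)]
fake_now[0] = 1.0                        # one second later, one token is back
results.append(bucket.allow())
print(results)                           # prints [True, True, False, True]
```

A gateway would typically keep one bucket per client or API key, so that one noisy caller cannot starve the others.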

Q: Why is an API Gateway (or reverse proxy) considered essential in a microservices architecture?

A: In a microservices architecture, an application is broken down into dozens or even hundreds of small, independent services. If clients were to communicate directly with each microservice, the client would become incredibly complex, needing to know the address and protocol of every service. The API Gateway acts as a single entry point, hiding this complexity from the client. The client only needs to talk to the gateway, which handles routing the request to the correct microservice. This greatly simplifies client development and improves the overall maintainability of the system.

Q: Will using Nginx as a reverse proxy slow down my website?

A: In the vast majority of cases, no, it will actually make it faster. While there is a tiny latency from adding a middle layer, features like static file caching and SSL termination significantly reduce the load on backend application servers. The application’s response time is the primary source of user-perceived latency. A cache hit can return files instantly, and SSL termination frees up server CPU resources. Therefore, the overall impact on user experience and backend performance is a net positive.
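The trade-off in this answer can be made concrete with some back-of-the-envelope arithmetic. Every number below is invented for illustration (a 60% cache hit ratio, 120 ms backend responses, 1 ms of proxy overhead). Measure your own system before drawing conclusions.

```python
def expected_latency_ms(hit_ratio: float, cache_hit_ms: float,
                        backend_ms: float, proxy_overhead_ms: float) -> float:
    """Average latency when a caching reverse proxy sits in front of the backend."""
    hit = proxy_overhead_ms + cache_hit_ms     # served straight from the proxy cache
    miss = proxy_overhead_ms + backend_ms      # forwarded to the application server
    return hit_ratio * hit + (1 - hit_ratio) * miss


direct_ms = 120.0                              # assumed backend response time, no proxy
with_proxy_ms = expected_latency_ms(
    hit_ratio=0.6, cache_hit_ms=2.0, backend_ms=120.0, proxy_overhead_ms=1.0
)
print(round(with_proxy_ms, 1))                 # prints 50.2
```

Under these assumptions, the extra millisecond of proxy overhead is far outweighed by the requests that never reach the backend. With a hit ratio of zero the proxy would add only its own overhead.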

Q: Why are cloud load balancers so much more expensive than Nginx?

A: Because a cloud LB provides a fully managed service. You are paying for the massive infrastructure, reliability guarantees, global deployment, and auto-scaling capabilities behind it, without needing to manage it yourself. Nginx is software; you have to provide your own servers, install and configure it, and be responsible for its high availability and troubleshooting. The cost models and value propositions are completely different: the choice is between running everything yourself and paying for convenience and peace of mind.

Q: For individual developers, is learning Nginx really that important? Won’t it be replaced by new technologies soon?

A: Yes, it’s very important, and it won’t become obsolete anytime soon. Nginx is the “standard answer” for solving high-performance web server problems. It embodies a deep understanding of networking, operating systems, and concurrent programming, and that way of thinking carries over to other tools. Even if another piece of software displaces it in the market, the architectural philosophy of layering, caching, and load balancing will remain the bedrock of web development. Learning it means learning a core methodology for solving problems.

Q: My automation scripts run on a server. Do I need FlashID for that?

A: If your scripts need to operate multiple online accounts (web or app), the answer is almost certainly yes. Server scripts typically have no GUI, while FlashID provides a way for a server-side application to programmatically control and manage those “isolated” and “disguised” browser instances. It’s like giving your automation a “multi-identity intelligent glove,” allowing it to safely handle tasks with different identities without directly operating on the server and causing account association.

Q: What’s the difference between FlashID’s “Cloud Phone” feature and its Fingerprint Browser?

A: They are a perfect combination that solves problems for different platforms.

- FlashID Fingerprint Browser: Mainly solves multi-account management for the PC (Web) side, creating isolated browser environments for each account.

- FlashID Cloud Phone: A complete Android operating system running in the cloud, primarily used for multi-account management on the mobile (Android App) side. For example, if you need to run 10 Douyin accounts simultaneously, you can log into 10 different Apps on separate cloud phones, with each App running in its own isolated environment.

Q: We are a small 10-person team doing social media marketing. Can we afford a professional tool like FlashID?

A: Whether a tool is “affordable” depends on how much cost it saves and how much value it creates. For a small team, human labor is the biggest expense. If you spend 5 hours a day manually logging in and managing 20 accounts, what is the monthly labor cost for that? FlashID’s window sync and RPA automation features can reduce that 5-hour task to minutes, freeing your team from repetitive labor to focus on content creation and strategy. The value this brings far outweighs the tool’s cost itself. In reality, this is an “investment” that helps you make money.
